NVIDIA

What Is an AI Factory?

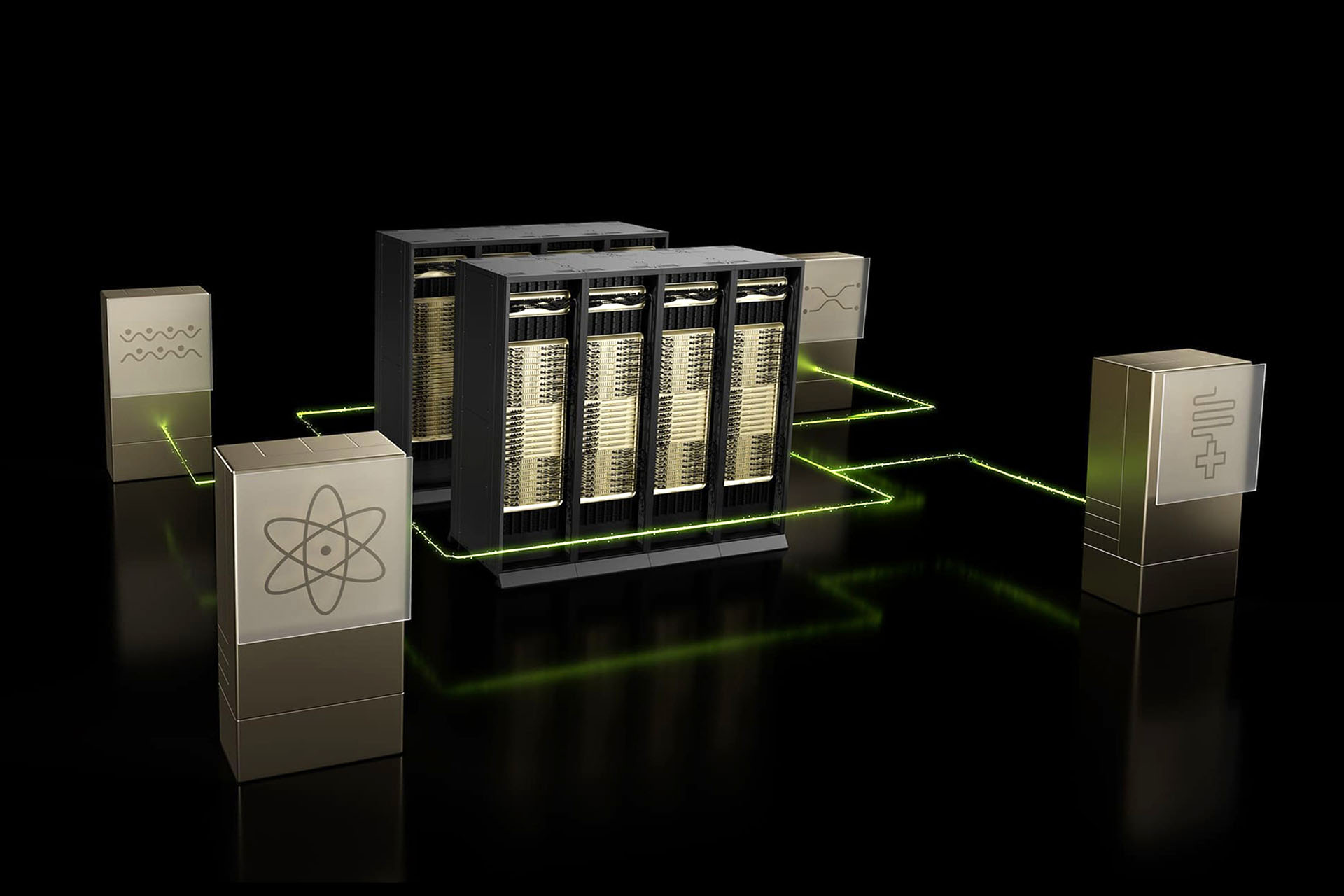

An AI factory is a purpose-built computing environment that transforms data into intelligence by managing the full AI lifecycle, from data ingestion and model training to fine-tuning and large-scale inference. Its core output is intelligence, measured in tokens processed, which powers decisions, automation, and the development of new AI solutions.

Unlike a general-purpose data center, an AI factory is designed specifically for AI workloads, with a focus on high-performance inference and energy efficiency. It runs a series of interconnected processes and components that manage each stage of the AI lifecycle, optimizing how models are created and deployed so that data is efficiently transformed into actionable intelligence.

In-Depth Overview

How Does an AI Factory Work?

Scaling LLMs Starts With Building Data Pipelines

Data pipelines form the strategic backbone for developing intelligent, safe, and scalable large language models (LLMs). They are critical to LLM success, transforming raw, unstructured data into high-quality, structured tokens that models can learn from effectively. Since high-quality data underpins modern intelligence, a well-designed pipeline ensures data cleanliness and consistency across datasets, directly shaping model behavior at scale.
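As a rough illustration of the stages described above, the following Python sketch normalizes, filters, deduplicates, and tokenizes a handful of documents. The function names, the word-count and letter-ratio thresholds, and the regex tokenizer are hypothetical simplifications. Production LLM pipelines typically use fuzzy deduplication and subword tokenizers rather than exact hashing and word splitting.

```python
import hashlib
import re
import unicodedata

# Hypothetical threshold, chosen for illustration only.
MIN_WORDS = 5


def normalize(text):
    """Unicode-normalize (NFKC) and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def is_quality(text, min_words=MIN_WORDS):
    """Very rough quality filter: enough words and mostly alphabetic characters."""
    if len(text.split()) < min_words:
        return False
    letters = sum(ch.isalpha() for ch in text)
    return letters / len(text) > 0.6


def tokenize(text):
    """Placeholder tokenizer that splits into lowercase words and punctuation marks.
    Real LLM pipelines use subword tokenizers (for example, BPE) instead."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def run_pipeline(raw_docs):
    """Yield token lists for documents that pass cleaning, filtering, and dedup."""
    seen = set()
    for doc in raw_docs:
        clean = normalize(doc)
        if not is_quality(clean):
            continue
        digest = hashlib.sha256(clean.encode("utf-8")).hexdigest()
        if digest in seen:  # exact-duplicate removal
            continue
        seen.add(digest)
        yield tokenize(clean)


if __name__ == "__main__":
    docs = [
        "AI factories   turn raw data into tokens.",
        "AI factories turn raw data into tokens.",
        "???",
        "Too short",
    ]
    for tokens in run_pipeline(docs):
        print(tokens)
```

Only one token list is printed: the second document becomes an exact duplicate of the first once whitespace is normalized, and the last two fail the quality filter.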

AI Inference Across the AI Factory

AI inference is a vital, iterative process within the AI lifecycle, enabling trained models to generate real-time predictions and decisions. In an AI factory, inference powers applications such as personalized recommendations, fraud detection, autonomous navigation, and generative AI. Full-stack inference infrastructure delivers low-latency, cost-efficient performance across cloud, hybrid, and on-prem environments. Because AI reasoning models run repeated inference passes and demand more compute per request, the AI factory continually optimizes for throughput, latency, and efficiency. These inference outputs feed back into the system, creating a data flywheel that improves model accuracy over time and drives scalable, intelligent automation across industries.
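The data flywheel can be sketched as a loop that turns logged inference results into new training examples. The example below is a minimal sketch under stated assumptions. The `InferenceRecord` type, the 1 to 5 rating scale, and the rating threshold are hypothetical. Real systems usually add human review, safety filtering, and evaluation before any fine-tuning run.

```python
from dataclasses import dataclass


@dataclass
class InferenceRecord:
    prompt: str
    response: str
    rating: int  # hypothetical feedback score: 1 (poor) to 5 (excellent)


def select_for_finetuning(records, min_rating=4):
    """Keep well-rated interactions as candidate prompt/completion pairs."""
    return [
        {"prompt": r.prompt, "completion": r.response}
        for r in records
        if r.rating >= min_rating
    ]


def flywheel_step(records, training_set, min_rating=4):
    """One turn of the flywheel: logged inference -> curated examples -> larger training set."""
    known = {ex["prompt"] for ex in training_set}
    for ex in select_for_finetuning(records, min_rating):
        if ex["prompt"] not in known:  # skip prompts already in the training set
            training_set.append(ex)
            known.add(ex["prompt"])
    return training_set


if __name__ == "__main__":
    logs = [
        InferenceRecord("Summarize this support ticket.", "The customer reports a login failure.", 5),
        InferenceRecord("Is this transaction fraudulent?", "Unclear.", 2),
    ]
    dataset = flywheel_step(logs, training_set=[])
    print(len(dataset), "new example(s) ready for the next fine-tuning run")
```

Each pass through `flywheel_step` grows the curated set only with interactions that users rated highly, which is the feedback loop the paragraph above describes, reduced to its simplest form.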

Testing and Evaluation with Digital Twins

Digital twins in AI factories allow teams to design, simulate, and optimize every aspect of a facility within a unified virtual environment before construction begins. By consolidating 3D data from various systems into a single simulation, engineering teams can collaborate in real time, instantly test design modifications, model potential failures, and validate redundancy plans. This approach streamlines planning, minimizes risk, and speeds up the deployment of next-generation AI infrastructure.
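One check a digital twin might automate is redundancy validation: confirming that the remaining equipment can still carry the load after one or more units fail. The Python sketch below is a toy stand-in for that idea, not an Omniverse API. The coolant distribution unit (CDU) names, capacities, and load figure are hypothetical.

```python
from itertools import combinations


def failure_scenarios(units, load_kw, max_failures=1):
    """Return (failed units, remaining kW) for every failure set that leaves
    capacity below the load. `units` maps a unit name to its capacity in kW."""
    shortfalls = []
    for k in range(1, max_failures + 1):
        for failed in combinations(units, k):
            remaining = sum(cap for name, cap in units.items() if name not in failed)
            if remaining < load_kw:
                shortfalls.append((failed, remaining))
    return shortfalls


if __name__ == "__main__":
    # Hypothetical cooling plant: three CDUs rated at 500 kW each, 900 kW heat load.
    cdus = {"CDU-1": 500, "CDU-2": 500, "CDU-3": 500}
    print("single failures:", failure_scenarios(cdus, load_kw=900))
    print("double failures:", failure_scenarios(cdus, load_kw=900, max_failures=2))
```

With three 500 kW units and a 900 kW load, no single failure causes a shortfall, but every two-unit failure does. Teams would want to catch that kind of finding in simulation, before construction.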

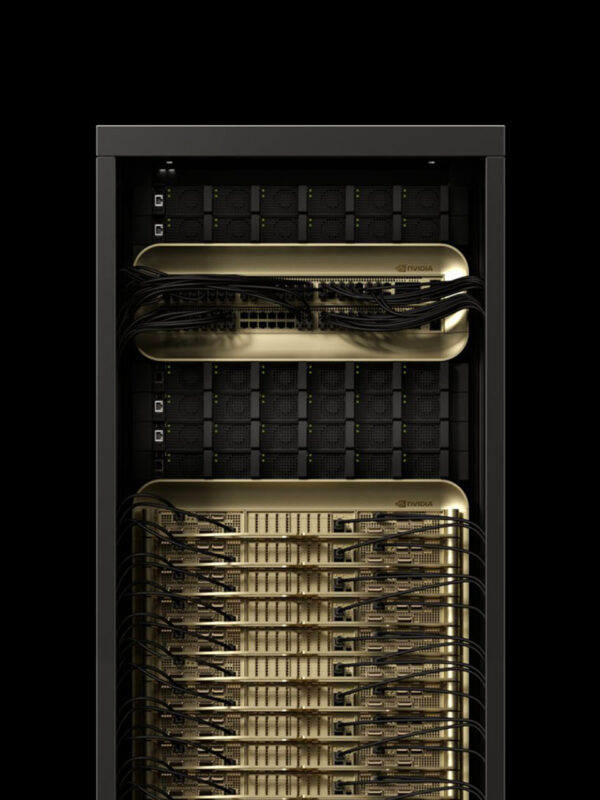

Full-Stack AI Infrastructure

AI infrastructure requires a combination of hardware and software to enable smooth AI deployment and operations. Hardware includes high-performance GPUs, CPUs, networking, storage, and advanced cooling systems, while the software is modular, scalable, and API-driven, integrating all components into a unified system. Built using enterprise-validated designs and reference architectures, this integrated ecosystem supports continuous updates and scalability, helping organizations grow alongside AI advancements.

Automation Tools

Automation tools help minimize manual work and ensure consistency throughout the AI lifecycle, from hyperparameter tuning to deployment workflows. This keeps AI models efficient, scalable, and continuously improving without delays from human intervention. These tools are critical for maintaining the high throughput and reliability needed for large-scale AI operations.
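Hyperparameter tuning is a common target for this kind of automation. The sketch below shows random search over a learning rate and batch size. Random search is one simple tuning strategy, not necessarily the one any particular tool uses. The `objective` function is a hypothetical placeholder for a real training-and-validation run, and its toy loss surface exists only so the example runs instantly.

```python
import math
import random


def objective(lr, batch_size):
    # Hypothetical placeholder for "train a model and return its validation loss".
    # Toy surface with its minimum at lr = 1e-3 and batch_size = 64.
    return (math.log10(lr) + 3) ** 2 + ((batch_size - 64) / 64) ** 2


def random_search(n_trials, seed=0):
    """Sample hyperparameters at random and keep the lowest-loss configuration."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_loss, best_params = math.inf, None
    for _ in range(n_trials):
        params = {
            "lr": 10 ** rng.uniform(-5, -1),  # log-uniform between 1e-5 and 1e-1
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_loss, best_params = loss, params
    return best_loss, best_params


if __name__ == "__main__":
    loss, params = random_search(n_trials=50)
    print(f"best loss {loss:.4f} with {params}")
```

Sampling the learning rate on a log scale reflects the usual practice of searching it across orders of magnitude rather than linearly.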

AI Factories

Redefining Data Centers and Enabling the Next Era of AI

NVIDIA and its ecosystem partners are building AI factories at scale for the AI reasoning era — and every enterprise will need one.

Know How

What Are the Benefits of an AI Factory?

Transform Raw Data Into Revenue

AI factories convert raw data into actionable intelligence that can be used to drive business decisions and generate revenue.

Optimize the Entire AI Life Cycle

From data ingestion to high-volume inference, AI factories streamline and optimize every step of the AI development process.

Boost Performance per Watt

Purpose-built with accelerated computing, AI factories are designed to handle compute-intensive tasks, providing significant performance and energy efficiency improvements for agentic AI and physical AI workloads.
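Performance per watt for inference is often expressed as throughput divided by power. Because one watt is one joule per second, tokens per second per watt equals tokens per joule. The helper functions below are a minimal sketch of that arithmetic. The throughput and power figures in the usage lines are illustrative placeholders, not measurements of any NVIDIA system.

```python
def tokens_per_joule(tokens_per_second, power_watts):
    """Performance per watt for inference: (tokens/s) / W = tokens per joule."""
    return tokens_per_second / power_watts


def energy_per_million_tokens_kwh(tokens_per_second, power_watts):
    """Energy needed to generate one million tokens, in kilowatt-hours."""
    seconds = 1_000_000 / tokens_per_second
    joules = seconds * power_watts
    return joules / 3.6e6  # 1 kWh = 3.6 MJ


if __name__ == "__main__":
    # Illustrative figures only: (name, tokens/s, watts)
    for name, tps, watts in [("system A", 10_000, 10_000), ("system B", 40_000, 20_000)]:
        print(name, tokens_per_joule(tps, watts), "tokens/J,",
              round(energy_per_million_tokens_kwh(tps, watts), 3), "kWh per 1M tokens")
```

Doubling tokens per joule halves the energy per million tokens. That is why performance per watt translates directly into operating cost at scale.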

Scale AI Deployments Efficiently

AI factories are built to enable efficient scaling up and scaling out of both sovereign AI infrastructure and enterprise AI infrastructure.

Provide a Secure and Adaptive Ecosystem

They offer a secure environment that supports continuous updates and expansion, allowing businesses to stay current with AI advancements.

Real-World Impact

Industry Use Cases for AI Factories

The versatility of AI factories means they can be leveraged across nearly any industry for AI-driven innovation and efficiency. Some standout examples include AI initiatives in the public sector, automotive, healthcare, telecommunications, and financial services industries.

AI as National Infrastructure

AI will become a core national utility like water or telecom. By investing in sovereign AI factories, governments can boost economic growth, drive research, address societal needs, build local language models, and secure leadership in the global AI landscape.

Drug Discovery & Personalized Medicine

In healthcare, AI factories speed up drug discovery and personalized treatments by analyzing massive datasets. Generative AI helps design new drug molecules and protocols, leading to better patient outcomes and lower costs.

Secure Financial Services

AI factories give financial institutions the hardware, software, and tools needed for AI-powered applications, including fraud detection, banking support, and algorithmic trading. They provide the computing power needed to drive secure, intelligent financial services.

Advanced Robotics & Autonomous Vehicles

AI factories power robotics and autonomous vehicles with high-performance computing and real-time data processing. They enable fast, accurate decisions, continuous learning, and safer systems while automating manufacturing to cut time and costs.

Telecom Network Efficiency & Customer Service

Telecom operators use AI factories to enhance network efficiency and customer service. For example, Telenor launched an AI factory to drive AI adoption and sustainability. AI factories reduce downtime, optimize networks, and deliver personalized customer support through LLMs.

Deployment

Where to Deploy AI Factories

AI factories provide flexible deployment options, allowing organizations to run AI where it delivers the greatest impact. They can be deployed on premises, in the cloud, or in a hybrid of the two.

On-Premises

Full control over data and performance, ideal for high-security and high-performance needs.

Cloud

Scalable, on-demand capacity without the need to own or operate physical infrastructure.

Hybrid

Combines on-prem control with cloud scalability for optimized cost, performance, and compliance.
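A hybrid deployment implies a placement policy that decides where each workload runs. The following Python sketch shows one hypothetical policy, not an NVIDIA product feature. Jobs flagged as sensitive must stay on premises, other jobs use spare on-prem GPUs first, and anything that does not fit bursts to the cloud. The `Job` fields, the greedy ordering, and the GPU counts are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    gpus: int
    sensitive: bool  # hypothetical flag: data must stay on premises for compliance


def place_jobs(jobs, onprem_gpus):
    """Toy hybrid placement policy returning {job name: location}."""
    placement = {}
    free = onprem_gpus
    # Sort so sensitive jobs claim on-prem capacity first (False sorts before True).
    for job in sorted(jobs, key=lambda j: not j.sensitive):
        if job.gpus <= free:
            placement[job.name] = "on-prem"
            free -= job.gpus
        elif job.sensitive:
            placement[job.name] = "queued"  # may not leave on-prem; wait for capacity
        else:
            placement[job.name] = "cloud"  # burst to the cloud for extra scale
    return placement


if __name__ == "__main__":
    jobs = [
        Job("pretrain", gpus=64, sensitive=False),
        Job("patient-record-finetune", gpus=32, sensitive=True),
        Job("batch-inference", gpus=16, sensitive=False),
    ]
    print(place_jobs(jobs, onprem_gpus=48))
```

In this example the sensitive fine-tuning job takes 32 of the 48 on-prem GPUs. The large pretraining job bursts to the cloud, and the small batch-inference job fits in the remaining on-prem capacity, combining control with scalability as the hybrid option describes.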

Know More

Frequently Asked Questions

Which industries can benefit from AI factories?

The versatility of AI factories means they can be leveraged across nearly any industry for AI-driven innovation and efficiency. Some standout examples include AI initiatives in the public sector, automotive, healthcare, telecommunications, and financial services industries.

How can I get started with an AI factory?

NVIDIA offers a fully integrated, optimized platform to build AI factories that power the next wave of AI innovation.

What is the NVIDIA Enterprise AI Factory Validated Design?

It’s a full-stack, validated design that helps enterprises build and deploy their own on-prem AI factories with confidence.

Why are high-performance GPUs important?

They provide the compute power needed to train complex AI models efficiently.

What are NVIDIA NVLink and NVLink Switch used for?

These high-speed interconnects enable fast communication between multiple GPUs, essential for large-scale AI workloads.

How does networking work within an AI factory?

NVIDIA Quantum InfiniBand and Spectrum-X™ Ethernet deliver robust, efficient networking for seamless data transfer and communication.

What does the full-stack AI inference platform include?

It features NVIDIA TensorRT for high-performance inference, NVIDIA Dynamo for workflow optimization, NVIDIA NIM microservices for easy deployment, and a data flywheel for continuous learning.

How does NVIDIA Omniverse help with AI factory design?

NVIDIA Omniverse provides a digital twin platform to design, test, and optimize next-gen AI data centers before physical deployment.